By Nikita Johnson, Founder – RE•WORK

January 18, 2017

Today’s solutions that seek to emulate the human mind waste time and energy moving data around. That is not what the human mind does – rather than move data around, it efficiently processes data in place.

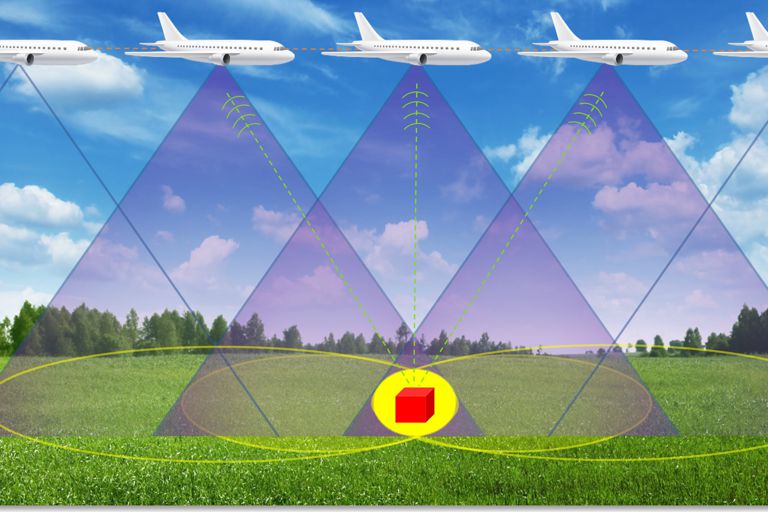

Avidan Akerib, VP of Associative Computing at GSI Technology, has a PhD in Applied Mathematics and Computer Science and holds more than 30 patents related to in-place associative computing. He will be presenting at the Deep Learning Summit in San Francisco, 26-27 January, where he will detail an in-place associative computing technology. This technology changes the concept of computing from serial data processing, where data is moved back and forth between the processor and memory, to massively parallel processing, compute, and search in place, directly in the memory array, much like the way the human mind works.

We chatted to Avidan ahead of the Deep Learning Summit to learn more about in-place associative computing, the key challenges to further advancements in high performance memory, how AI will assist with pattern recognition in the future and Avidan’s predictions for Deep Learning in 2017.

Can you give us an overview of ‘in-place associative computing’?

| CPU/GPGPU (Current solutions) | In-Place Computing |
| --- | --- |
| 1. Send an address to memory | Search by content |
| 2. Fetch the data from memory and send it to the processor | Mark in place |
| 3. Compute serially per core (thousands of cores at most) | Compute in place on millions of processors (the memory itself becomes millions of processing elements) |
| 4. Write the data back to memory, further wasting IO resources | No need to write data back; the result is already in the memory |
| 5. Send data to each location that needs it | If needed, distribute or broadcast at once |

In-place associative computing removes the bottleneck at the I/O between the processor and memory, resulting in significant performance-over-power ratio improvement compared to conventional methods that use CPU and GPGPU (General Purpose GPU) along with DRAM. It turns the memory array into a massively parallel processor (millions of processors).
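The contrast between the two flows can be illustrated with a toy model. This is only a sketch under stated assumptions, not GSI's design: memory is a plain Python list, the "bus" is a counter of word transfers, and the function names `conventional_increment` and `in_place_increment` are hypothetical. The in-place version still loops in software, so it shows where data moves, not how fast real hardware would run.

```python
# Toy model only: counts word transfers across a hypothetical
# processor-memory bus. It does not model real APU hardware or timing.

def conventional_increment(memory, predicate):
    """Fetch every word, test it on the 'processor', write back the matches."""
    transfers = 0
    for address in range(len(memory)):
        value = memory[address]          # fetch: one word crosses the bus
        transfers += 1
        if predicate(value):
            memory[address] = value + 1  # write back: another crossing
            transfers += 1
    return transfers


def in_place_increment(memory, predicate):
    """Treat each cell as its own processing element; no data crosses the bus."""
    # Search by content and mark matching cells in place.
    marks = [predicate(value) for value in memory]
    # Compute in place, on the marked cells only.
    for index, marked in enumerate(marks):
        if marked:
            memory[index] += 1
    return 0  # in this model, no data words are transferred


data_a = [3, 8, 3, 5, 3, 9]
data_b = list(data_a)
bus_a = conventional_increment(data_a, lambda v: v == 3)
bus_b = in_place_increment(data_b, lambda v: v == 3)
print(data_a == data_b, bus_a, bus_b)  # True 9 0
```

Both functions leave memory in the same final state; only the number of modeled bus transfers differs (six fetches plus three write-backs versus none).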

Why is it important to move from serial data processing to massive parallel data processing?

First, serial data processing limits performance and wastes power by transferring data back and forth across an I/O bus. With GSI’s APU technology, data is processed in place without having to send data back and forth across the I/O bus. Second, what is viewed as “massive parallel data processing” today is actually parallel processing on a small scale.

GSI changes the concept of “massive parallel data processing” by essentially turning every memory cell that holds data into a processor. This allows for “massive parallel data processing” on a completely different scale. Instead of the thousands of processors as currently seen with GPGPUs, the APU allows for millions of parallel processors on a chip.

GSI also adds instructions to the ALU (arithmetic logic unit), such as searching by content, selectively writing to specific cells, and distributing a value to multiple cells at once. These new instructions, together with parallel ALU instructions (such as floating-point operations), allow a wide range of data mining applications to be handled in real time at low power.
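The three kinds of instruction mentioned here (search by content, selective write, and distributing a value to many cells at once) can be sketched in software. The class `ToyAssociativeArray` and its method names are hypothetical and chosen for illustration; they are not GSI's instruction set, and a software list cannot reproduce the parallelism of the hardware.

```python
class ToyAssociativeArray:
    """Illustrative software stand-in for an associative memory array."""

    def __init__(self, values):
        self.cells = list(values)
        self.marks = [False] * len(self.cells)

    def search(self, key):
        """Search by content: mark every cell equal to key, return the match count."""
        self.marks = [cell == key for cell in self.cells]
        return sum(self.marks)

    def selective_write(self, value):
        """Write value only to the cells marked by the last search."""
        self.cells = [value if marked else cell
                      for cell, marked in zip(self.cells, self.marks)]

    def broadcast(self, value):
        """Distribute one value to every cell at once."""
        self.cells = [value] * len(self.cells)


array = ToyAssociativeArray([7, 2, 7, 4])
hits = array.search(7)       # marks cells 0 and 2
array.selective_write(0)     # only the marked cells change
print(hits, array.cells)     # 2 [0, 2, 0, 4]
```

Note that no addresses appear in the calling code: cells are selected by what they contain, which is the defining property of the associative approach described above.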

What are the key challenges to further advancements in high performance memory?

I believe that advances in high performance memory will come in multiple phases.

• The first phase needs to make storage more “intelligent”. Today’s storage simply holds the data. We need to replace “dumb cache” with “intelligent cache” that preprocesses for the main processor (CPU or GPGPU). This will increase overall performance and reduce power.

• The second phase replaces the main processor with an associative processor.

• The third phase replaces all storage (volatile and non-volatile) in data centers with associative storage.

What applications/industries do you support memory products for at GSI?

Target applications for GSI’s APU include convolutional neural networks, recommender systems, data mining, medical diagnostics, biotechnology, finance, and cyber security.

The APU will allow similarity search for big data to improve by orders of magnitude and advanced deep learning algorithms to execute much better. It will also open up the possibilities for entirely new algorithms.
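For readers unfamiliar with similarity search, one common formulation is nearest-neighbor lookup over binary codes using Hamming distance. The sketch below assumes that formulation purely for illustration; the interview does not say which distance measure or algorithm the APU uses, and the names `hamming_distance` and `top_k_similar` are hypothetical.

```python
def hamming_distance(a, b):
    """Number of bit positions in which two integer codes differ."""
    return bin(a ^ b).count("1")


def top_k_similar(query, codes, k):
    """Return indices of the k codes closest to query (ties broken by index)."""
    ranked = sorted(range(len(codes)),
                    key=lambda i: (hamming_distance(query, codes[i]), i))
    return ranked[:k]


# Hypothetical 8-bit codes standing in for hashed images, documents, etc.
codes = [0b10110010, 0b10110011, 0b01001101, 0b10100010]
nearest = top_k_similar(0b10110010, codes, 2)
print(nearest)  # [0, 1]
```

Every stored code is compared against the query independently, which is why this kind of workload suits an architecture that evaluates all memory cells in parallel.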

How do you see AI assisting with pattern recognition in the future?

Data is growing rapidly due to applications such as IoT, social networking, and AI as it applies to image recognition, voice processing, natural language processing, and recommender systems. Current AI solutions will not scale with this data growth.

What is needed is for AI to integrate directly with the data itself. That is how the human brain works to see patterns and make intuitive leaps.

What are your predictions for Deep Learning in 2017?

Unfortunately, 2017 may prove somewhat disappointing because much of today’s amazing progress will likely start to hit a brick wall. Our industry will start to realize that we need to break some of the limitations of the current direction. “More is better” works to a certain point, but it will not be enough going forward.

Beyond 2017, we will start to see new solutions that can scale with orders of magnitude more data. We will see new algorithms and applications that take advantage of new conceptual frameworks such as associative processing.